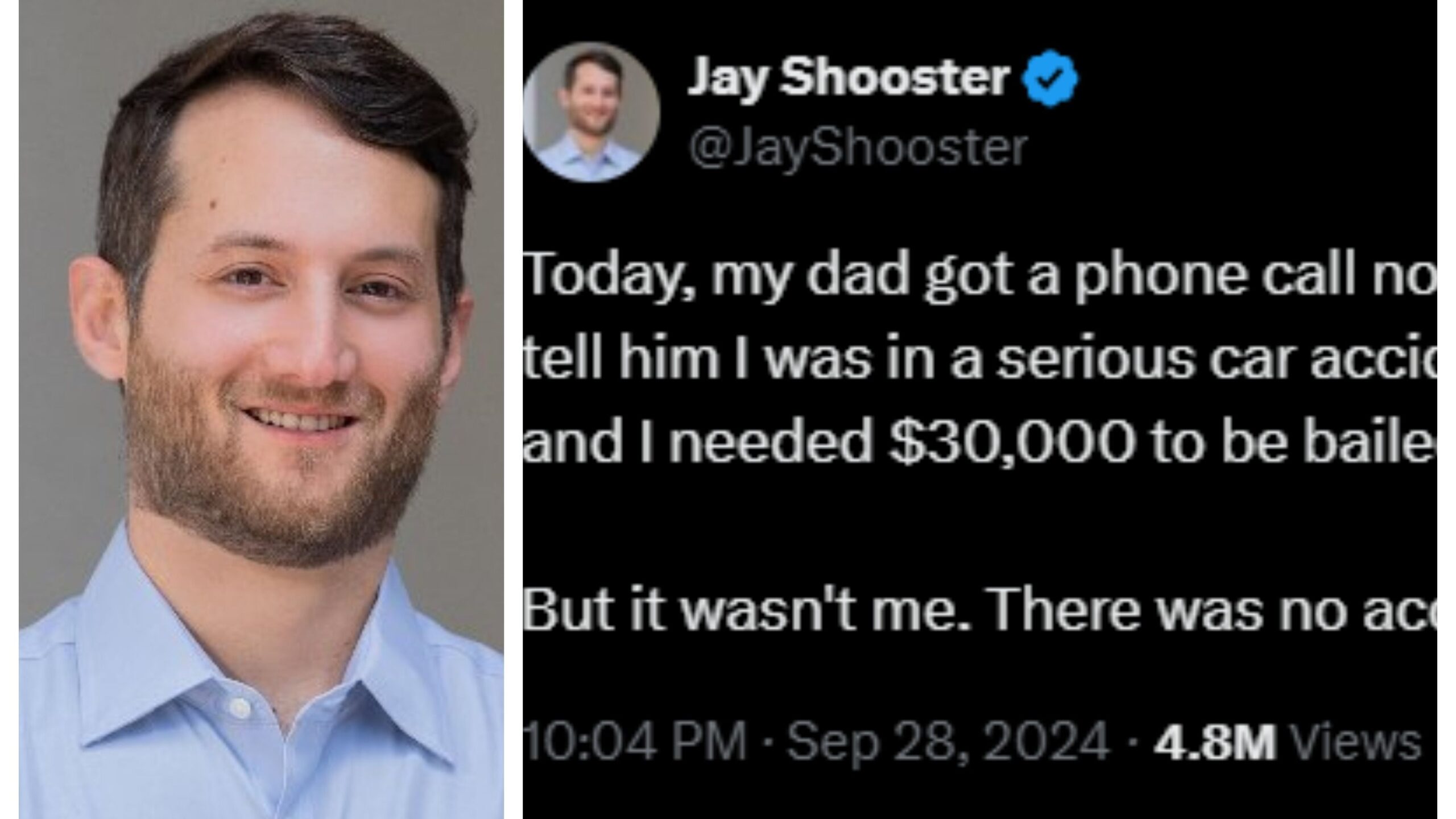

Viral news: Florida State House candidate Jay Shooster recently revealed a surprising experience on social media.

Shooster’s parents received a call from someone who sounded identical to him, claiming to have been in a car accident, arrested for DUI, and in need of $30,000 for bail. Shooster clarified that the voice was a clone created from just 15 seconds of audio taken from a recent television appearance.

Despite being aware of these types of scams, Shooster was surprised that his own family almost fell for one. He stressed the need for awareness and stricter regulations on AI to avoid similar incidents. He also highlighted a further complication of voice cloning technology: people in real emergencies may have difficulty proving their identity to loved ones.

Many users took to the comments section of Shooster’s post, noting the rise in AI-powered scams and the need for secret passphrases to verify identities. One user commented: “It’s probably not a coincidence. And yet it’s identity theft.” Another user shared a similar experience: “My dad received a call from my oldest son. However, he didn’t fall for the scam because he called him ‘grandpa,’ which is not what my son calls him.”
