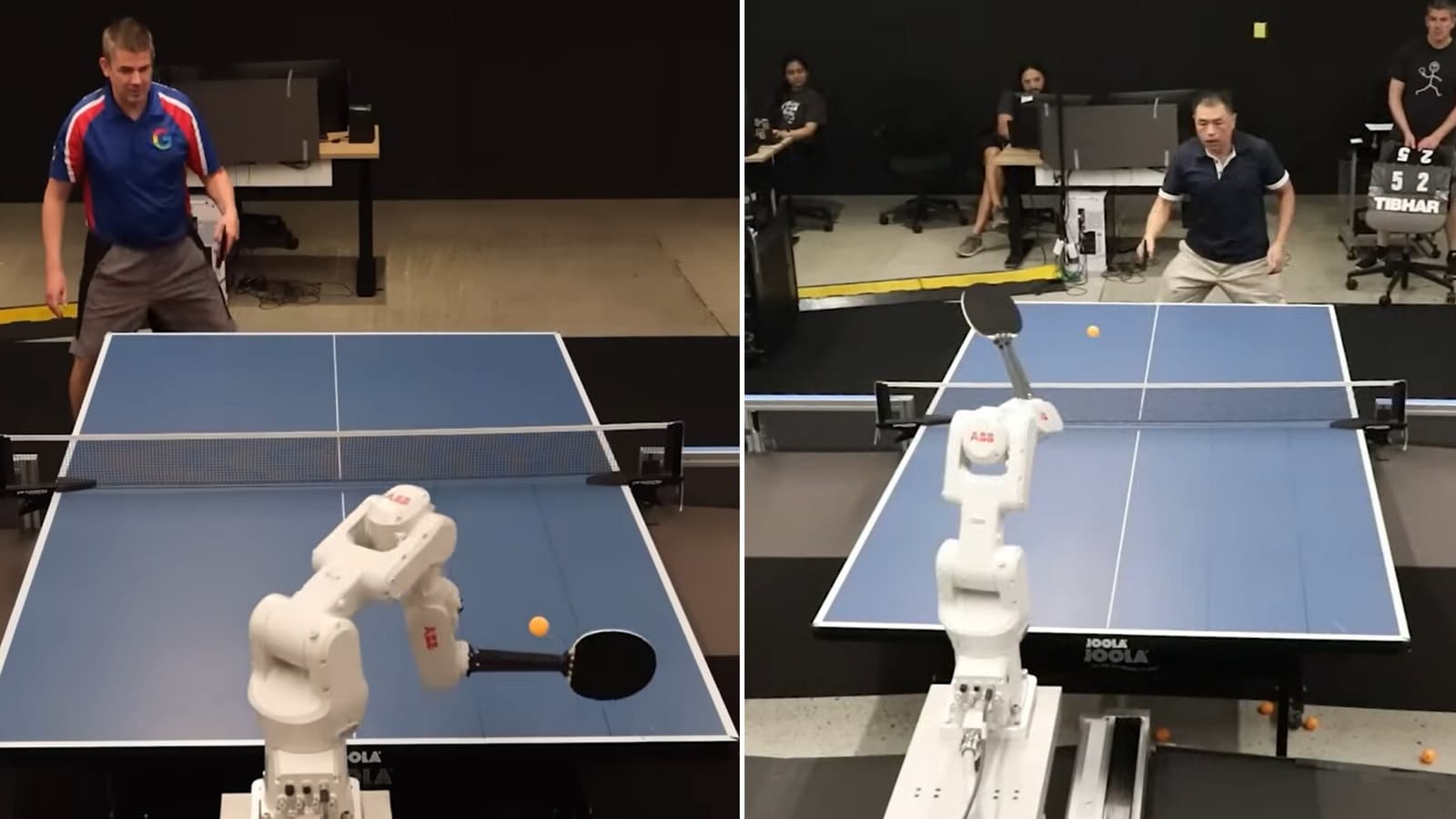

Google's artificial intelligence company, DeepMind, has produced a robot that can play table tennis at a competitive amateur level. The result comes from a recent study in which the AI-powered robot played matches against human opponents of varying skill, including some advanced players.

The DeepMind robot played competitive matches against table tennis players of varying skill levels (beginner, intermediate, advanced, and advanced+, as determined by a professional table tennis coach). Standard table tennis rules were followed, with some modifications because the robot cannot physically serve, and each human played three games against the machine. According to DeepMind, this is the first time a learned robot agent has achieved amateur human-level performance in competitive table tennis.

“The robot won 45% of matches and 46% of games. Broken down by skill level, we see that the robot won all matches against beginners, lost all matches against advanced and advanced+ players, and won 55% of matches against intermediate players,” explained the Google DeepMind website.

According to participants in the DeepMind study, the robot was a "fun" and "engaging" opponent that they would like to face again. However, advanced players were able to exploit weaknesses in its playing style; some noted that the robot struggled to handle underspin. This matters because table tennis is a physically demanding sport in which human players train for years to reach an advanced level of proficiency.

“Table tennis requires years of training for humans to master due to its complex low-level skills and strategic gameplay. A low-level skill that is not strategically optimal, but can be executed safely, might be a better choice. This distinguishes table tennis from purely strategic games like chess or Go,” DeepMind adds.

The development comes just days after the company released results showing that two AI systems still in development, AlphaProof and AlphaGeometry 2, solved four of the six problems at the 2024 International Mathematical Olympiad. The systems solved one problem within minutes but took up to three days to solve the others, longer than the competition's time limit.
